The History of Modern Web Development

In 2023 and in the preceding years, we saw the resurgence of meta-frameworks for all the big frameworks and libraries used in modern frontend development. One of the meta-frameworks with the largest following is Next.js, which is built on top of React, an immensely popular library for building modern frontend applications and sites. This framework, and many like it, including but not limited to SvelteKit, Nuxt.js, Remix and AnalogJS, are all part of the same movement, called “Server-Side Rendering”. In this approach, frontend JavaScript apps are pre-rendered on the server. On every request, the server fills the page with up-to-date data and sends it off to the requesting client, which is usually a browser. This helps dynamic web pages load faster, makes them easier for search engines to index and improves the overall user experience.

Those who have been in the Web Development business for 15 or 20 years may have noticed something. This seems like a revival of how websites were built in the 2000s, when pages were rendered on the server, JavaScript started playing a bigger role and web pages became more interactive. You may have seen the posts saying “This is just PHP all over again”, and the comparison is certainly striking!

Let’s take a dive through 20 years of web development and how it came to be, what earlier techniques inspired the current generation and where we might be heading next.

Late 90s

In the 90s, as the internet was growing in popularity, most web pages were simple and static. Raw HTML was shipped to the browser. The browser, at that time Internet Explorer or Netscape, would then request some CSS and assets, usually images, gather all of that and present the now styled HTML page.

A user would see a collection of links and images that could be clicked. Whether they were browsing articles or shopping, the interface consisted mainly of these links and images. The user clicks a link and the browser retrieves a new page. The server responds with the requested HTML, the HTML is parsed and the browser reaches out for more downloadable assets. And the cycle continues.

This is also the era of GeoCities (1994) where people could host their basic HTML, CSS and images for everyone to see.

In 1995, PHP is released and quickly gains traction among users, as it is particularly easy to pick up, learn and run. Soon lots of sites move to this more dynamic approach, built with PHP or with alternatives like JSP, ASP and ColdFusion (now owned by Adobe).

This release gives rise to the first CMSs (Content Management Systems), which make it even easier for people to pick up and build a dynamic site. This makes PHP basically the first server-side rendering tool to gain wide popularity. It takes HTML templates and populates them with user information and relevant, up-to-date data such as post details and the number of views. It then renders the resulting HTML page on demand and serves the final result to the browser.
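The idea of filling a template with fresh data on every request can be sketched in a few lines. The example below is only an illustration in TypeScript, not how PHP or any particular CMS does it: the `{{name}}` placeholder syntax, the `Post` shape and the function names are all assumptions made up for this sketch.

```typescript
// Hypothetical data a CMS might load from its database for one post.
interface Post {
  title: string;
  author: string;
  views: number;
}

// Escape characters that have a special meaning in HTML, so that
// user-supplied values cannot break out of the surrounding markup.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Replace every {{name}} placeholder with the matching (escaped) value.
// Placeholders without a matching value render as an empty string.
function renderTemplate(
  template: string,
  data: Record<string, string | number>
): string {
  return template.replace(/\{\{(\w+)\}\}/g, (_match: string, key: string) =>
    key in data ? escapeHtml(String(data[key])) : ""
  );
}

// Assumed template; a real site would keep this in a separate file.
const postTemplate =
  "<article><h1>{{title}}</h1><p>By {{author}}, {{views}} views</p></article>";

// On each request the server looks up the latest data and renders the page.
function renderPostPage(post: Post): string {
  return renderTemplate(postTemplate, {
    title: post.title,
    author: post.author,
    views: post.views,
  });
}
```

Calling `renderPostPage` with the current post data on every request is what makes the page dynamic: the template stays the same, only the data changes.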

The web is rapidly increasing the value it could deliver to users. In a short time span, dynamic sites like the example above started popping up. With the introduction of JavaScript (December 1995, by Brendan Eich of Netscape), website creators, developers and hobbyists start to add a bit of interactivity to their pages. In 1996, Netscape submits this new scripting language to the Ecma International committee where it would be expanded upon and made available to any browser vendor that is willing to adopt this new scripting paradigm.

Early 2000s

In the early 2000s, the Internet starts to boom and grows explosively in popularity and usage. As more and more regular people get access to computers and the Internet, the demand for web pages and services grows with them.

But in these early days, scripting is still very uncommon. The content might be dynamic, but the pages themselves are fairly static and offer little interactivity. With the introduction of Firefox (Mozilla, 2004), the browser market starts to shift and this brings a new wave of slightly more interactive webpages.

These are also the years of MySpace (2003, ColdFusion) and Facebook (2004, PHP), taking the web by storm. In The Netherlands, we had our own alternative: Hyves (2004, PHP/ASP). These platforms all pop up around the same time as more people start to use the Internet on a daily basis. In the same time period, out-of-the-box tools like phpBB (2000) and WordPress (2003) also become available, making it much easier for anyone interested in starting to build a website to do so.

This is when website builders begin to figure out that interactive websites are a lot more engaging, and that clicking a vote button or submitting a post shouldn’t hijack your complete web experience but keep you in the same place and update it live. Perhaps it’s also a better user experience if the page helps you correct mistakes with some rudimentary validation before sending a form. With some scripting, you could send that click on the upvote button to the server in the background to save it. The next time you loaded the page, the server would get the latest data and render your upvote. With some more scripting, the page could eagerly update the vote count so users wouldn’t even have to refresh their page anymore. Adding a comment and directly showing it on the page would be a close next step.
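The eager update of the vote count described above is what is now often called an optimistic update. A minimal sketch in TypeScript could look like this; the `VoteState` shape and both function names are invented for this illustration, and the actual background request to the server is deliberately left out.

```typescript
// State of a single vote button as the page sees it (hypothetical shape).
interface VoteState {
  count: number;     // vote count currently shown to the user
  confirmed: number; // last count the server is known to have stored
}

// The user clicks: show the new count right away, before the server answers.
function voteOptimistically(state: VoteState): VoteState {
  return { count: state.count + 1, confirmed: state.confirmed };
}

// The server answered: keep the shown count if the vote was saved,
// otherwise roll back to the last confirmed count.
function settleVote(state: VoteState, saved: boolean): VoteState {
  return saved
    ? { count: state.count, confirmed: state.count }
    : { count: state.confirmed, confirmed: state.confirmed };
}
```

The page calls `voteOptimistically` on click and `settleVote` once the background request finishes, so the user sees the result immediately and only notices the server when something goes wrong.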

In 2004, Google released Gmail to the public, which was one of the first fully interactive interfaces. This inspired a much wider audience to go and explore interactivity on the web, which is only just beginning.

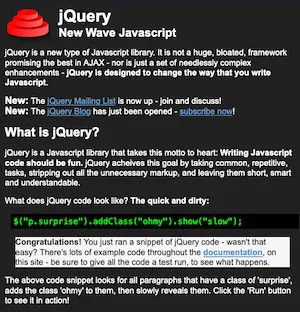

jQuery

On the 26th of August 2006, jQuery is released to the world. jQuery is a DOM manipulation and querying library, which is a fancy way of saying it allows developers to more easily find elements on an HTML page, like input fields and buttons, and manipulate those same elements. It also provides a way to respond to events and makes it a lot easier to work with “AJAX” (Asynchronous JavaScript and XML). The XMLHttpRequest object behind AJAX had been part of Internet Explorer since 1999, but the W3C only started standardizing it as a Web Standard in 2006.

jQuery then almost becomes a default for modern web pages as it makes adding some interactivity to a page easy. As of writing, it is still claimed to be running on 77% of web pages according to Wikipedia.

Early 2010s

Fully interactive pages are slowly becoming the norm, and the movement to what will later be called “web apps” is slowly getting under way. With the browser technology getting better, the introduction of HTML5 (2012) and the compatibility gap slowly closing between browsers, the road is now paved for more intricate and complex web applications.

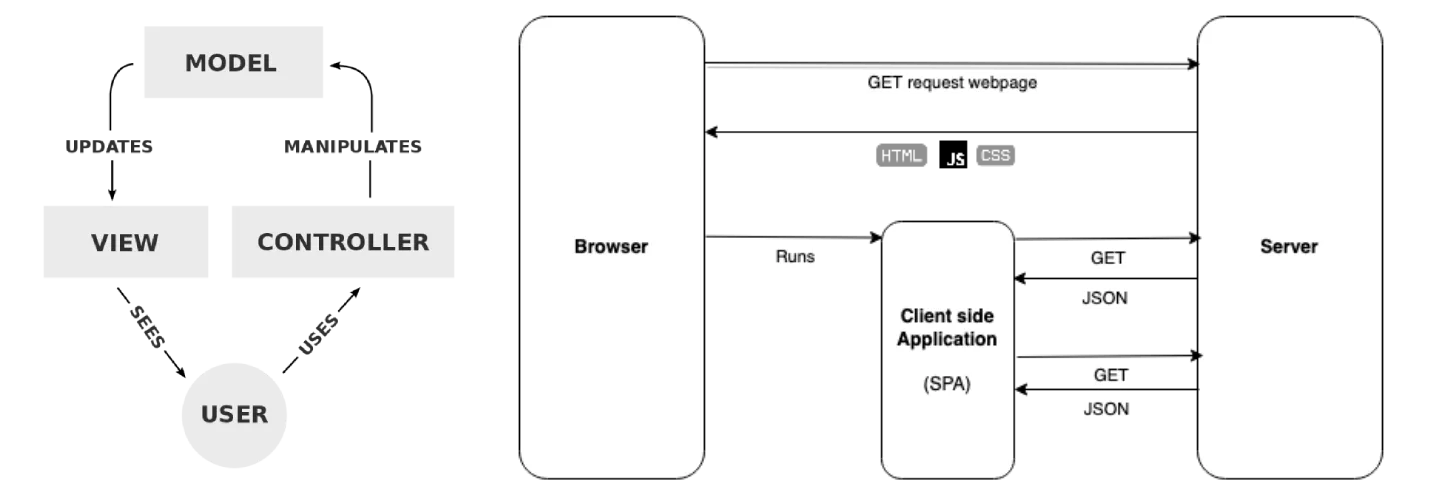

In comes AngularJS (2010), a library written by Misko Hevery and then adopted by Google. In that same year, both KnockoutJS and BackboneJS (2010) are also released. These libraries share many of the same concepts, with slight differences in approach. They popularized the “MVC” model, or “Model-View-Controller”, a concept that helps you structure your apps and separate your domain models from your view logic. This is also the time when the abbreviation “SPA”, or Single-Page Application, gains popularity as these types of applications become more mainstream.

In 2013, React is released by Facebook to the public. This takes the web by storm again and gains even more popularity thanks to an easier learning curve. In the early days of AngularJS and KnockoutJS, hybrid combinations of page and application were still quite common: a page would retrieve some data for rendering on the server side, package it with an AngularJS or KnockoutJS app, and send it over to the browser. The browser then had to start the client-side application and use all the data that was sent over to build some application state.

This idea is slowly overtaken by a new one: a single app that does it all. It retrieves the data, renders new sets of components, handles navigation, et cetera. For React this means the growth of a massive community exchanging ideas and libraries, some of which grow to massive sizes themselves, like React Router (June 2014) and Redux (June 2015).

In 2014, Angular 2+ is announced at the ng-Europe conference, and 6 months later a developer preview is released (April 2015). This complete overhaul in approach from AngularJS turns Angular into a full framework that has a clear opinion and comes “batteries-included”. Angular comes with a bit of a steeper learning curve, by default together with TypeScript, and rethinks a lot of concepts developers have gotten used to from AngularJS.

2014 also marks the year a new contestant shows up on the JavaScript framework market: Vue.js, which, as stated by its author, takes the good parts of AngularJS and uses them to create a new, modern and lightweight library.

So begins a competition that spans multiple years between what are now the big three JavaScript solutions for building web applications.

Return of SSR

In the years after 2016, we see a resurgence of “Server-Side Rendering” solutions. Angular Universal (May 2016), Next.js (October 2016), Nuxt.js (October 2016) are all released in the same year and enjoy different levels of success and popularity.

SSR is making a comeback because purely client-side applications have a few downsides, specifically in the areas of loading speed and indexability. Most apps people build need good Search Engine Optimization (SEO) or need to be easy to share on social media sites. Server-rendered pages that use client-side JS after the initial load are a much better fit for these types of applications, which explains the popularity of these meta-frameworks. Most of them rely on a technique called “Hydration”, where after the page and its assets are fully downloaded, the client-side JavaScript picks up where the server left off for a seamless user experience. This means it has to figure out what state the application and all its relevant components are in before it can resume functioning as usual. The meta-frameworks built on top of the different frontend libraries each provide their own methods of achieving this seamless transition.
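To make the hand-over from server to client a bit more concrete, here is a deliberately simplified sketch in TypeScript. It treats the page as a plain string so it can run outside a browser, and it is not how Next.js, Nuxt.js or any other meta-framework implements hydration. The `__STATE__` script id, the `CounterState` shape and all function names are assumptions made up for this example.

```typescript
// Hypothetical application state for a page with a single counter button.
interface CounterState {
  count: number;
}

// Server side: render the markup and embed the state it was rendered from,
// so the client does not have to fetch or recompute it.
function renderOnServer(state: CounterState): string {
  const markup = `<button id="counter">Clicked ${state.count} times</button>`;
  // Escape "<" so the JSON can never close the script tag early.
  const payload = JSON.stringify(state).replace(/</g, "\\u003c");
  return `${markup}<script id="__STATE__" type="application/json">${payload}</script>`;
}

// Client side, step 1: recover the state the server embedded in the page.
function readEmbeddedState(html: string): CounterState {
  const match = html.match(
    /<script id="__STATE__" type="application\/json">([\s\S]*?)<\/script>/
  );
  const json = match ? match[1] : undefined;
  if (json === undefined) {
    throw new Error("no embedded state found in page");
  }
  return JSON.parse(json) as CounterState;
}

// Client side, step 2: rebuild the application state and expose the handler
// that a real framework would attach to the already rendered button.
function hydrate(html: string): {
  getState: () => CounterState;
  onClick: () => void;
} {
  let state = readEmbeddedState(html);
  return {
    getState: () => state,
    onClick: () => {
      state = { count: state.count + 1 };
    },
  };
}
```

After `hydrate(renderOnServer({ count: 3 }))`, the client continues from a count of 3 instead of starting over, which is the essence of picking up where the server left off. Real frameworks additionally match the state to the existing DOM nodes and attach event listeners to them.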

In addition to hydration, most modern meta-frameworks also do some form of code splitting: they identify which parts of the JavaScript are required to start a web application and only ship that code up front. The rest can then be loaded when the browser and the application actually need it. This speeds up application start times, because only the required JavaScript is downloaded.

The future

The most recent developments are in that same space of optimizing how much we send from server to client. Less JavaScript is better and makes the whole experience faster. How many applications really need the fully interactive model that we’ve been building for almost 10 years now?

Astro (April 2022) and Qwik (September 2022) seem to be gaining in popularity as the spearheads of partial hydration and “resumability”. Both optimize front-end client-side applications by shipping as little JavaScript to the browser as possible and only downloading additional JavaScript when the user starts interacting with the page. This is a rethinking of the “Hydration” strategy we have seen in other SSR solutions.

An even more extreme example is HTMX, which seems to be quite recently released (2022). This completely removes the client-side reactivity model and instead goes back to server-side rendered HTML, which is requested in response to events and then replaces targeted HTML elements, like divs, on the page.

It seems the trend of fully client-side web applications has passed its peak, and simpler models with a focus on speed and less JavaScript seem to be on the rise again. In the end, we’re all still using the same browser-based JavaScript APIs under the hood. They are just abstracted away by brilliant frameworks and libraries that have made developers heaps more productive and enabled incredibly cool and complex applications over the last decade.

But does every website need a web app? How much interactivity does a blog really need? Surely there will be a need for full-fledged web applications to enable users to do their work in an efficient manner. Some applications simply have a realtime nature to them, or require a bunch of information coming from different sources to be combined in a smart way. But not every site out there needs that level of interactivity. It depends on what the user needs and how they are supposed to be using said application, not to mention the increased complexity for maintaining such sites.

This is a complex field with many routes to success. Yet I don’t think every website requires the amount of interactivity we’ve been building for the past 5 years. Not every site needs to be a web app. On many web pages with little interactivity, the interactive part, like a search with filters, could just be a single component within a server-rendered whole. Of course, the dynamic nature of the data may change the level of interactivity and how much can be pre-rendered. However, I think that we’ve shipped off too much logic to the client, to the point where we have to duplicate application logic in both front-end and back-end. This may offer a nice user experience, but it’s not really required or even demanded by the users.

Perhaps we could do better and be smarter about the level of interactivity our users really need. Other business related requirements, like requiring a full REST or GraphQL API for other applications (like mobile apps), might influence how we want to approach the type of applications we write ourselves, and the complexity and interactivity required.

I personally think the time of taking any idea for the web and making it a client-side web application is over. And perhaps, that’s a good thing.